PrivacyDocs comes with a specially trained AI system that users can access to perform two tasks:

- Chat with a virtual compliance expert about compliance gaps, recommended actions, etc., and

- Check compliance texts.

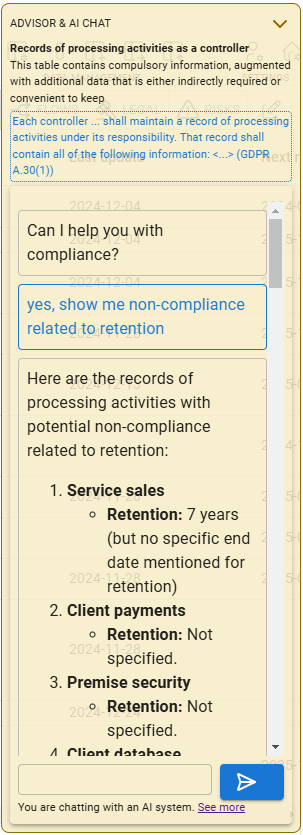

Compliance Chat

Use the yellow instant advisor screen in the bottom-right corner to get information about the current data table or a data element, or to chat with the AI chatbot.

You can ask anything about your compliance; the chatbot is aware of all your compliance documentation, the GDPR, and the AI Act, supplemented with detailed instructions provided by PrivacyDocs.

Try to be very specific when talking to the chatbot. Use the following examples:

- List processing activities that are not compliant.

- Recommend retention schemes for the non-compliant processing activities.

- Which activities have not been reviewed in the past year?

- Suggest key DPIA items for processing activity 'marketing emails'

- Generate a privacy statement for web-facing activities

The chatbot's advice is often remarkably useful, but it should always be critically reviewed.

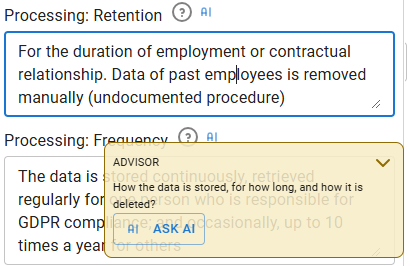

Checking Compliance Texts

In-house GDPR experts often find it difficult to write specific GDPR compliance texts. These texts must answer privacy-domain questions that many people find counter-intuitive, cover specific aspects, and use a particular kind of language.

PrivacyDocs can check your texts with a specially trained AI system that is aware of the specific GDPR requirements for each piece of text and can advise on or suggest improvements to it.

The AI system is a text analyzer, so it is only enabled for data fields that contain textual information. Fields that contain dates or references to other fields are validated by the compliance advisor instead. The AI system needs at least a few words of text to work.

When AI is enabled for a field, the following 'Ask AI' icon appears next to the field edit box:

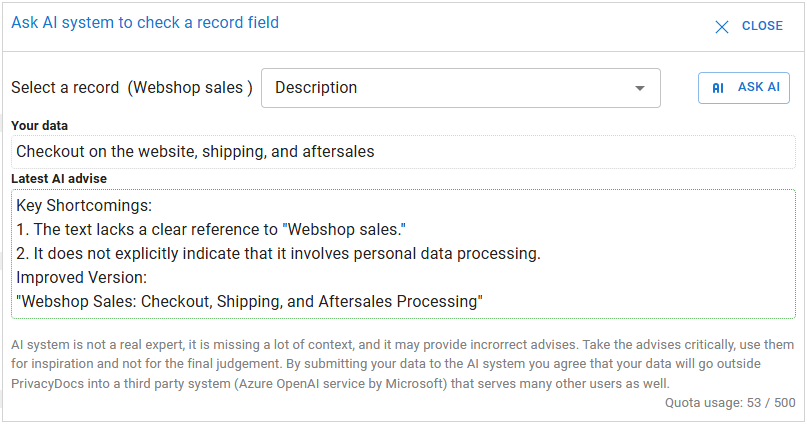

Clicking the icon opens the 'Ask AI' dialog.

For human expert advice you can always request the PrivacyDocs GDPR consultancy service.

The EU AI Act

All companies using AI tools are subject to the EU AI Act (the Artificial Intelligence Act, Regulation (EU) 2024/1689), which regulates how AI systems are used, developed, or procured. In particular, the use of 'high-risk' AI systems is regulated. Using AI to develop compliance documentation is not considered 'high-risk', and no additional requirements are imposed.

Read a special post about the EU AI Act here

What is AI, actually?

Artificial Intelligence (AI) is a very broad discipline focused on understanding and replicating human behavior with computers. These days, when people say AI, they usually mean Generative AI (GenAI), the part of AI focused on generating text in the way a human writer would.

At a high level, AI is simple to understand. An AI (GenAI) system consists of a large table full of numbers called a Large Language Model, or LLM. This table is built from huge volumes of text (about 40 billion pages), which tens of thousands of computers process and compile into a file of 2 to 100 GB. It is built in such a way that, with a very small additional program, it can receive the first few words of a sentence from a human and complete it. A human may say 'Hello' and the LLM can complete it with 'How are you doing'.

In other words, it looks at all these texts and at each moment can suggest the next best word. A skill humans can only dream of, right?
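The idea of suggesting the next best word can be illustrated with a toy sketch. This is not how PrivacyDocs or a real LLM is built: it simply counts which word most often follows another in a tiny made-up text, whereas a real LLM learns far richer patterns from billions of pages. The function names and the sample text are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def build_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def complete(model, start, max_words=4):
    """Extend `start` by repeatedly appending the most frequent next word."""
    words = start.lower().split()
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word was never seen with a follower
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical miniature "training text" (a real model uses billions of pages).
corpus = (
    "hello how are you doing . "
    "hello how are you today . "
    "hello how are you doing"
)
model = build_model(corpus)
print(complete(model, "Hello"))  # hello how are you doing
```

The "table full of numbers" in this sketch is just the word-pair counts; the "very small additional program" is the `complete` function that looks up the most likely next word.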

GenAI models are very expensive to build. Besides collecting all these billions of pages, one needs to pay for the (expensive) servers that must run continuously for months to crunch the data. Each of these servers costs more than 1,000 euros per month to rent, so a model costs millions of euros to build.
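As a rough back-of-the-envelope check of that figure, the sketch below multiplies the number of servers by the monthly rent and the training duration. The server count (10,000) and the duration (3 months) are assumed values picked only to match "tens of thousands of computers" and "months"; actual numbers vary widely between models.

```python
def training_cost_eur(servers, eur_per_server_month, months):
    """Rental cost of running `servers` machines for `months` months."""
    return servers * eur_per_server_month * months


# Assumed inputs: 10,000 servers, 1,000 EUR per server per month, 3 months.
cost = training_cost_eur(servers=10_000, eur_per_server_month=1_000, months=3)
print(f"{cost:,} EUR")  # 30,000,000 EUR
```

Even with these conservative assumptions, server rental alone lands in the tens of millions of euros.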